Papers, Presentations, etc.

What did you read in 2015?

Another year has passed and academic platforms bombard us with end-of-year summaries. So, here are the most-read HLP Lab papers of 2015. Congratulations to Dave Kleinschmidt, who according to ResearchGate leads the 2015 HLP Lab pack with his beautiful paper on the ideal adapter framework for speech perception, adaptation, and generalization. The paper was cited 22 times in the first 6 months of being published! Well deserved, I think … as a completely neutral (and non-ideal) observer ;).

Academia.edu mostly agreed, Read the rest of this entry »

The (in)dependence of pronunciation variation on the time course of lexical planning

Language, Cognition, and Neuroscience just published Esteban Buz’s paper on the relation between the time course of lexical planning and the detail of articulation (as hypothesized by production ease accounts).

Several recent proposals hold that much if not all of explainable pronunciation variation (variation in the realization of a word) can be reduced to effects on the ease of lexical planning. Such production ease accounts have been proposed, for example, for effects of frequency, predictability, givenness, or phonological overlap with recently produced words on the articulation of a word. According to these accounts, these effects on articulation are mediated through parallel effects on the time course of lexical planning (e.g., recent research by Jennifer Arnold, Jason Kahn, Duane Watson, and others; see references in paper).

This would indeed offer a parsimonious explanation of pronunciation variation. However, the critical test for this claim is a mediation analysis, Read the rest of this entry »

CUNY 2015 plenary

As requested by some, here are the slides from my 2015 CUNY Sentence Processing Conference plenary last week:

I’m posting them here for discussion purposes only. During the Q&A several interesting points were raised. For example Read the rest of this entry »

HLP Lab at CUNY 2015

We hope to see y’all at CUNY in a few weeks. In the interest of hopefully luring you to some of our posters, here’s an overview of the work we’ll be presenting. In particular, we invite our reviewers, who so boldly claimed (but did not provide references for) the triviality of our work ;), to visit our posters and help us mere mortals understand.

- Articulation and hyper-articulation

- Unsupervised and supervised learning during speech perception

- Syntactic priming and implicit learning during sentence comprehension

- Uncovering the biases underlying language production through artificial language learning

Interested in more details? Read on. And, as always, I welcome feedback. (To prevent spam, first-time posters are moderated; after that, your posts will always show up directly.)

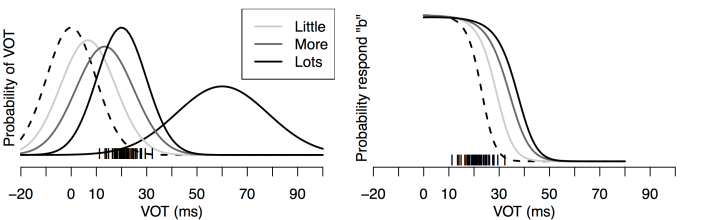

Speech recognition: Recognizing the familiar, generalizing to the similar, and adapting to the novel

At long last, we have finished a substantial revision of Dave Kleinschmidt’s opus “Robust speech perception: Recognize the familiar, generalize to the similar, and adapt to the novel”. It’s still under review, but we’re excited about it and wanted to share what we have right now.

The paper builds on a large body of research in speech perception and adaptation, as well as distributional learning in other domains to develop a normative framework of how we manage to understand each other despite the infamous lack of invariance. At the core of the proposal stands the (old, but often under-appreciated) idea that variability in the speech signal is often structured (i.e., conditioned on other variables in the world) and that an ideal observer should take advantage of that structure. This makes speech perception a problem of inference under uncertainty at multiple different levels Read the rest of this entry »

HLP Lab and collaborators at CMCL, ACL, and CogSci

The summer conference season is coming up and HLP Lab, friends, and collaborators will be presenting their work at CMCL (Baltimore, joint with ACL), ACL (Baltimore), CogSci (Quebec City), and IWOLP (Geneva). I wanted to take this opportunity to give an update on some of the projects we’ll have a chance to present at these venues. I’ll start with three semi-randomly selected papers. Read the rest of this entry »

Presentation at CNS symposium on “Prediction, adaptation and plasticity of language processing in the adult brain”

Earlier this week, Dave Kleinschmidt and I gave a presentation as part of a mini-symposium at the Cognitive Neuroscience Conference on “Prediction, adaptation and plasticity of language processing in the adult brain”, organized by Gina Kuperberg. For this symposium we were tasked with addressing the following questions:

- What is prediction and why do we predict?

- What is adaptation and why do we adapt?

- How do prediction and adaptation relate?

Although we addressed these questions in the context of language processing, most of our points are pretty general. We aimed to provide intuitions about the notions of distribution, prediction, distributional/statistical learning, and adaptation. We walked through examples of belief-updating, intentionally keeping our presentation math-free. Perhaps some of the slides are of interest to some of you, so I attached them below. A more in-depth treatment of these questions is also provided in Kleinschmidt & Jaeger (under review, available on request).

Comments welcome. (Sorry – some of the slides look strange after importing them, and all the animations got lost, but I think they are all readable.)

It was great to see these notions discussed and related to ERP, MEG, and fMRI research in the three other presentations of the symposium by Matt Davis, Kara Federmeier and Eddy Wlotko, and Gina Kuperberg. You can read their abstracts following the link to the symposium I included above.

Another example of recording spoken productions over the web

A few days ago, I posted a summary of some recent work on syntactic alignment with Kodi Weatherholtz and Kathryn Campbell-Kibler (both at The Ohio State University), in which we used the WAMI interface to collect speech data for research on language production over Amazon’s Mechanical Turk.

Socially-mediated syntactic alignment

The first step in our OSU-Rochester collaboration on socially-mediated syntactic alignment was submitted a couple of weeks ago. Kodi Weatherholtz in Linguistics at The Ohio State University took the lead in this project together with Kathryn Campbell-Kibler (same department) and me.

We collected spoken picture descriptions via Amazon’s crowdsourcing platform Mechanical Turk to investigate how social attitude towards an interlocutor and conflict management styles affected syntactic priming. Our paradigm combines Read the rest of this entry »

Join me at the 15th Texas Linguistic Society conference?

I’ll be giving a plenary presentation at the 15th Texas Linguistic Society conference to be held in October in Austin, TX. Philippe Schlenker from NYU and David Beaver from Austin will be giving plenaries, too. The special session will be on the “importance of experimental evidence in theories of syntax and semantics, and focus on research that highlights the unique advantages of the experimental environment, as opposed to other sources of data” (from their website). Submit an abstract before May 1st and I’ll see you there.

Perspective paper on second (and third and …) language learning as hierarchical inference

We’ve just submitted a perspective paper on second (and third and …) language learning as hierarchical inference that I hope might be of interest to some of you (feedback welcome).

- Pajak, B., Fine, A.B., Kleinschmidt, D., and Jaeger, T.F. submitted. Learning additional languages as hierarchical probabilistic inference: insights from L1 processing. submitted for review to Language Learning.

We’re building on Read the rest of this entry »

Workshop announcement (Tuebingen): Advances in Visual Methods for Linguistics

This workshop on data visualization might be of interest to a lot of you. I wish I could just hop over the pond.

- Date: 24-Sept-2014 – 26-Sept-2014

- Location: Tuebingen, Germany

- Contact Person: Fabian Tomaschek (contact@avml-meeting.com)

- Web Site: http://avml-meeting.com

- Call Deadlines: 21 March / 18 April

The AVML-meeting offers a meeting place for all linguists from all fields who are interested in elaborating and improving their data visualization skills and methods. The meeting consists of a one-day hands-on workshop Read the rest of this entry »

A few reflections on “Gradience in Grammar”

In my earlier post I provided a summary of a workshop on Gradience in Grammar last week at Stanford. The workshop prompted many interesting discussions, but here I want to talk about an (admittedly long ongoing) discussion it didn’t prompt. Several of the presentations at the workshop talked about prediction/expectation and how they are a critical part of language understanding. One implication of these talks is that understanding the nature and structure of our implicit knowledge of linguistic distributions (linguistic statistics) is crucial to advancing linguistics. As I was told later, there were, however, a number of people in the audience who thought that this type of data doesn’t tell us anything about linguistics and, in particular, grammar (unfortunately, this opinion was expressed outside the Q&A session and not towards the people giving the talks, so that it didn’t contribute to the discussion). Read the rest of this entry »

“Gradience in Grammar” workshop at CSLI, Stanford (#gradience2014)

A few days ago, I presented at the Gradience in Grammar workshop organized by Joan Bresnan, Dan Lassiter, and Annie Zaenen at Stanford’s CSLI (1/17-18). The discussion and audience reactions (incl. lack of reaction in some parts of the audience) prompted a few thoughts/questions about Gradience, Grammar, and to what extent the meaning of generative has survived in modern-day generative grammar. I decided to break this up into two posts. This one summarizes the workshop – thanks to Annie, Dan, and Joan for putting this together!

The stated goal of the workshop was (quoting from the website):

For most linguists it is now clear that most, if not all, grammaticality judgments are graded. This insight is leading to a renewed interest in implicit knowledge of “soft” grammatical constraints and generalizations from statistical learning and in probabilistic or variable models of grammar, such as probabilistic or exemplar-based grammars. This workshop aims to stimulate discussion of the empirical techniques and linguistic models that gradience in grammar calls for, by bringing internationally known speakers representing various perspectives on the cognitive science of grammar from linguistics, psychology, and computation.

Apologies in advance for butchering the presenters’ points with my highly subjective summary; feel free to comment. Two of the talks demonstrated

Going full Bayesian with mixed effects regression models

Thanks to some recently developed tools, it’s becoming very convenient to do full Bayesian inference for generalized linear mixed-effects models. First, Andrew Gelman et al. have developed Stan, a general-purpose sampler (like BUGS/JAGS) with a nice R interface which samples from models with correlated parameters much more efficiently than BUGS/JAGS. Second, Richard McElreath has written glmer2stan, an R package that essentially provides a drop-in replacement for the lmer command that runs Stan on a generalized linear mixed-effects model specified with a lme4-style model formula.

This means that, in many cases, you can simply replace calls to (g)lmer() with calls to glmer2stan():

    # Standard (non-Bayesian) fit with lme4
    library(lme4)
    lmer.fit <- glmer(accuracy ~ (1|item) + (1+condition|subject) + condition,
                      data=data, family='binomial')
    summary(lmer.fit)

    # Same lme4-style model formula, now fit with Stan via glmer2stan
    library(glmer2stan)
    library(rstan)
    stan.fit <- glmer2stan(accuracy ~ (1|item) + (1+condition|subject) + condition,
                           data=data, family='binomial')
    stanmer(stan.fit)

There’s the added benefit that you get a sample from the full, joint posterior distribution of the model parameters.
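To give a rough feel for what a joint posterior sample buys you, here is a minimal sketch in base R. Note the assumptions: the draws are simulated with rnorm() as a hypothetical stand-in (they are not output from Stan or glmer2stan), the names `draws`, `intercept`, and `condition` are made up for illustration, and `condition` is assumed to be coded 0/1. Real posterior draws would typically also be correlated across parameters, which these simulated ones are not.

```r
# Hypothetical stand-in for posterior draws (one row per draw, one column per
# parameter, on the log-odds scale). NOT real output from a fitted model.
set.seed(1)
n_draws <- 4000
draws <- data.frame(
  intercept = rnorm(n_draws, mean = 1.2, sd = 0.15),
  condition = rnorm(n_draws, mean = 0.4, sd = 0.20)
)

# Posterior mean and 95% credible interval for each parameter
post_summary <- t(sapply(draws, function(x) {
  c(mean = mean(x), quantile(x, probs = c(0.025, 0.975)))
}))
print(round(post_summary, 2))

# Posterior probability that the condition effect is positive
p_positive <- mean(draws$condition > 0)

# Because each row is a draw of all parameters together, any function of
# several parameters has its own posterior. Here: the difference in predicted
# accuracy between the two conditions, on the probability scale.
acc_baseline  <- plogis(draws$intercept)
acc_treatment <- plogis(draws$intercept + draws$condition)
acc_diff      <- acc_treatment - acc_baseline
diff_ci       <- quantile(acc_diff, probs = c(0.025, 0.975))
print(round(diff_ci, 3))
```

The point of the last step is that derived quantities are computed draw by draw, so their uncertainty comes for free, without any extra approximation.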

Read on for more about the advantage of this approach and how to use it.