distributional learning

Speech recognition: Recognizing the familiar, generalizing to the similar, and adapting to the novel

At long last, we have finished a substantial revision of Dave Kleinschmidt’s opus “Robust speech perception: Recognize the familiar, generalize to the similar, and adapt to the novel”. It’s still under review, but we’re excited about it and wanted to share what we have right now.

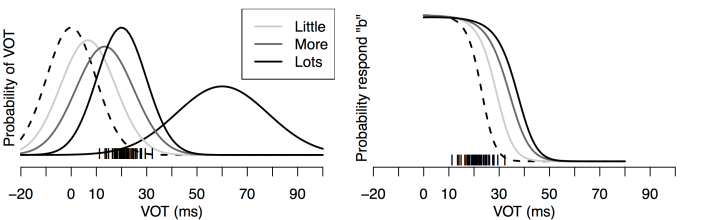

The paper builds on a large body of research in speech perception and adaptation, as well as distributional learning in other domains, to develop a normative framework of how we manage to understand each other despite the infamous lack of invariance. At the core of the proposal stands the (old, but often under-appreciated) idea that variability in the speech signal is often structured (i.e., conditioned on other variables in the world) and that an ideal observer should take advantage of that structure. This makes speech perception a problem of inference under uncertainty at multiple levels.

A few reflections on “Gradience in Grammar”

In my earlier post I provided a summary of a workshop on Gradience in Grammar last week at Stanford. The workshop prompted many interesting discussions, but here I want to talk about an (admittedly long-running) discussion it didn’t prompt. Several of the presentations at the workshop talked about prediction/expectation and how they are a critical part of language understanding. One implication of these talks is that understanding the nature and structure of our implicit knowledge of linguistic distributions (linguistic statistics) is crucial to advancing linguistics. As I was told later, however, a number of people in the audience thought that this type of data doesn’t tell us anything about linguistics and, in particular, grammar (unfortunately, this opinion was expressed outside the Q&A session and not to the people giving the talks, so it didn’t contribute to the discussion).

“Gradience in Grammar” workshop at CSLI, Stanford (#gradience2014)

A few days ago, I presented at the Gradience in Grammar workshop organized by Joan Bresnan, Dan Lassiter, and Annie Zaenen at Stanford’s CSLI (1/17-18). The discussion and audience reactions (including the lack of reaction in some parts of the audience) prompted a few thoughts and questions about Gradience, Grammar, and the extent to which the meaning of “generative” has survived in modern-day generative grammar. I decided to break this up into two posts. This one summarizes the workshop – thanks to Annie, Dan, and Joan for putting this together!

The stated goal of the workshop was (quoting from the website):

For most linguists it is now clear that most, if not all, grammaticality judgments are graded. This insight is leading to a renewed interest in implicit knowledge of “soft” grammatical constraints and generalizations from statistical learning and in probabilistic or variable models of grammar, such as probabilistic or exemplar-based grammars. This workshop aims to stimulate discussion of the empirical techniques and linguistic models that gradience in grammar calls for, by bringing internationally known speakers representing various perspectives on the cognitive science of grammar from linguistics, psychology, and computation.

Apologies in advance for butchering the presenters’ points with my highly subjective summary; feel free to comment. Two of the talks demonstrated