statistical learning

Speech perception and generalization across talkers

We recently submitted a research review on “Speech perception and generalization across talkers and accents”, which provides an overview of the critical concepts and debates in this domain of research. The manuscript is still under review, but we wanted to share the current version. Of course, feedback is always welcome.

In this paper, we review the mixture of processes that enable robust understanding of speech across talkers despite the lack of invariance. These processes include (i) automatic pre-speech adjustments of the distribution of energy over acoustic frequencies (normalization); (ii) sensitivity to category-relevant acoustic cues that are invariant across talkers (acoustic invariance); (iii) sensitivity to articulatory/gestural cues, which can be perceived directly (audio-visual integration) or recovered from the acoustic signal (articulatory recovery); (iv) implicit statistical learning of talker-specific properties (adaptation, perceptual recalibration); and (v) the use of past experiences (e.g., specific exemplars) and structured knowledge about pronunciation variation (e.g., patterns of variation that exist across talkers with the same accent) to guide speech perception (exemplar-based recognition, generalization).

Speech recognition: Recognizing the familiar, generalizing to the similar, and adapting to the novel

At long last, we have finished a substantial revision of Dave Kleinschmidt’s opus “Robust speech perception: Recognize the familiar, generalize to the similar, and adapt to the novel”. It’s still under review, but we’re excited about it and wanted to share what we have right now.

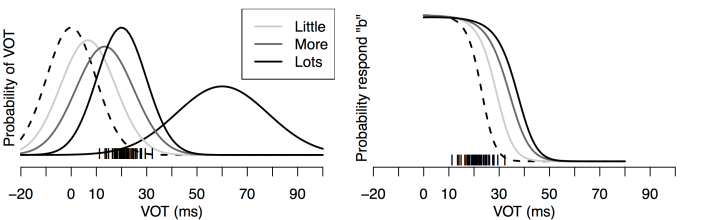

The paper builds on a large body of research in speech perception and adaptation, as well as distributional learning in other domains, to develop a normative framework of how we manage to understand each other despite the infamous lack of invariance. At the core of the proposal stands the (old, but often under-appreciated) idea that variability in the speech signal is often structured (i.e., conditioned on other variables in the world) and that an ideal observer should take advantage of that structure. This makes speech perception a problem of inference under uncertainty at multiple different levels …