regression

Updated slides on GLM, GLMM, plyr, etc. available

Some of you asked for the slides to the Mixed effect regression class I taught at the 2013 LSA Summer Institute in Ann Arbor, MI. The class covered some Generalized Linear Model, Generalized Linear Mixed Models, extensions beyond the linear model, simulation-based approaches to assessing the validity (or power) of your analysis, data summarization and visualization, and reporting of results. The class included slides from Maureen Gillespie, Dave Kleinschmidt, and Judith Degen (see above link). Dave even came by to Ann Arbor and gave his lecture on the awesome power of plyr (and reshape etc.), which I recommend. You might also just browse through them to get an idea of some new libraries (such as Stargazer for quick and nice looking latex tables). There’s also a small example to work through for time series analysis (for beginners).

Almost all slides were created in knitr and latex (very conveniently integrated into RStudio — I know some purists hate it, but comm’on), so that the code on the slides is the code that generated the output on the slides. Feedback welcome.

New R resource for ordinary and multilevel regression modeling

Here’ s what I received from the Center of Multilevel Modeling at Bristol (I haven’t checked it out yet; registration seems to be free but required):

The Centre for Multilevel Modelling is very pleased to announce the addition of R practicals to our free on-line multilevel modelling course. These give detailed instructions of how to carry out a range of analyses in R, starting from multiple regression and progressing through to multilevel modelling of continuous and binary data using the lmer and glmer functions. MLwiN and Stata versions of these practicals are already available. You will need to log on or register onto the course to view these practicals. Read More... http://www.cmm.bris.ac.uk/lemma/course/view.php?id=13

More on random slopes and what it means if your effect is not longer significant after the inclusion of random slopes

I thought the following snippet from a somewhat edited email I recently wrote in reply to a question about random slopes and what it means that an effect becomes insignificant might be helpful to some. I also took it as an opportunity to updated the procedure I described at https://hlplab.wordpress.com/2009/05/14/random-effect-structure/. As always, comments are welcome. What I am writing below are just suggestions.

[…] an insignificant effect in an (1 + factor|subj) model means that, after controlling for random by-subject variation in the slope/effect of factor, you find no (by-convention-significant) evidence for the effect. Like you suggest, this is due to the fact that there is between-subject variability in the slope that is sufficiently large to let us call into question the hypothesis that the ‘overall’ slope is significantly different from zero.

[…] So, what’s the rule of thumb here? If you run any of the standard simple designs (2×2, 2×3, 2x2x2,etc.) and you have the psychologist’s luxury of plenty of data (24+item, 24+ subject […]), the full random effect structure is something you should entertain as your starting point. That’s in Clark’s spirit. That’s what F1 and F2 were meant for. […] All of these approaches do not just capture random intercept differences by subject and item. They also aim to capture random slope differences.

[…] here’s what I’d recommend during tutorials now because it often saves time for psycholinguistic data. I am only writing down the random effects but, of course, I am assuming there are fixed effects, too, and that your design factors will remain in the model. Let’s look at a 2×2 design: Read the rest of this entry »

Two interesting papers on mixed models

While searching for something else, I just came across two papers that should be of interest to folks working with mixed models.

- Schielzeth, H. and Forstmeier, W. 2009. Conclusions beyond support: overconfident estimates in mixed models. Behavioral Ecology Volume 20, Issue 2, 416-420. I have seen the same point being made in several papers under review and at a recent CUNY (e.g. Doug Roland’s 2009? CUNY poster). On the one hand, it should be absolutely clear that random intercepts alone are often insufficient to account for violations of independence (this is a point, I make every time I am teaching a tutorial). On the other hand, I have reviewed quite a number of papers, where this mistake was made. So, here you go. Black on white. The moral is (once again) that no statistical procedure does what you think it should do if you don’t use it the way it was intended to.

- The second paper takes on a more advanced issue, but one that is becoming more and more relevant. How can we test whether a random effect is essentially non-necessary – i.e. that it has a variance of 0? Currently, most people conduct model comparison (following Baayen, Davidson and Bates, 2008). But this approach is not recommended (and neither do Baayen et al recommend it) if we want to test whether all random effects can be completely removed from the model (cf. the very useful R FAQ list, which states “do not compare lmer models with the corresponding lm fits, or glmer/glm; the log-likelihoods […] include different additive terms”). This issue is taken on in Scheipl, F., Grevena, S. and Küchenhoff, H. 2008. Size and power of tests for a zero random effect variance or polynomial regression in additive and linear mixed models. Computational Statistics & Data Analysis.Volume 52, Issue 7, 3283-3299. They present power comparisons of various tests.

Mixed model’s and Simpson’s paradox

For a paper I am currently working on, I started to think about Simpson’s paradox, which wikipedia succinctly defines as

“a paradox in which a correlation (trend) present in different groups is reversed when the groups are combined. This result is often encountered in social-science […]”

The wikipedia page also gives a nice visual illustration. Here’s my own version of it. The plot shows 15 groups, each with 20 data points. The groups happen to order along the x-axis (“Pseudo distance from origin”) in a way that suggests a negative trend of the Pseudo distance from origin against the outcome (“Pseudo normalized phonological diversity”). However, this trend does not hold within groups. As a matter of fact, in this particular sample, most groups show the opposite of the global trend (10 out of 15 within-group slopes are clearly positive). If this data set is analyzed by an ordinary linear regression (which does not have access to the grouping structure), the result will be a significant negative slope for the Pseudo distance from origin. So, I got curious: what about linear mixed models?

Tutorial on Regression and Mixed Models at Penn State

Last week (02/3-5/10), I had the pleasure to give the inaugural CLS Graduate Student Young Scientist Colloquium (“An information theoretic perspective on language production”) at the Center for Language Science at Penn State (State College).

I also gave two 3h-lectures on regression and mixed models. The slides for Day 1 introduce linear regression, generalized linear models, and generalized linear mixed models. I am using example analyses of real psycholinguistic data sets from Harald Baayen’s languageR library (freely available through the free stats package R). The slides for Day 2 go through problems and solutions for regression models. For more information have a look at the online lectures available via the HLP lab wiki. I’ve uploaded the pdf slides and an R script. There also might be a pod cast available at some point. Feedback welcome. I’ll be giving a similar workshop at McGill in May, so watch for more materials.

I had an intensive and fun visit, meeting with researchers from Psychology, Communication and Disorders, Linguistics, Spanish, German, etc. I learned a lot about bilingualism (not only though) and a bit about anticipatory motor planning. So thanks to everyone there who helped to organize the visit, especially Jorge Valdes and Jee Sook Park. And thanks to Judith Kroll for the awesome cake (see below). Goes without saying that it was a pleasure meeting the unofficial mayor of State College, too ;). See you all at CUNY! Read the rest of this entry »

Multinomial random effects models in R

This post is partly a response to this message. The author of that question is working on ordered categorical data. For that specific case, there are several packages in R that might work, none of which I’ve tried. The most promising is the function DPolmm() from DPpackage. It’s worth noting, though, that in that package you are committed to a Dirichlet Process prior for the random effects (instead of the more standard Gaussian). A different package, mprobit allows one clustering factor. This could be suitable, depending on the data set. MNP, mlogit, multinomRob, vbmp, nnet, and msm all offer some capability of modeling ordered categorical data, and it’s possible that one of them allows for random effects (though I haven’t discovered any yet). MCMCpack may also be useful, as it provides MCMC implementations for a large class of regression models. lrm() from the Design package handles ordered categorical data, and clustered bootstrap sampling can be used for a single cluster effect.

I’ve recently had some success using MCMCglmm for the analysis of unordered multinomial data, and want to post a quick annotated example here. It should be noted that the tutorial on the CRAN page is extremely useful, and I encourage anyone using the package to work through it.

I’m going to cheat a bit in my choice of data sets, in that I won’t be using data from a real experiment with a multinomial (or polychotomous) outcome. Instead, I want to use a publicly available data set with some relevance to language research. I also need a categorical dependent variable with more than two levels for this demo to be interesting. Looking through the data sets provided in the languageR package, I noticed that the dative data set has a column SemanticClass which has five levels. We’ll use this as our dependent variable for this example. We’ll investigate whether the semantic class of a ditransitive event is influenced by the modality in which it is produced (spoken or written).

library(MCMCglmm)

data("dative", package = "languageR")

k <- length(levels(dative$SemanticClass))

I <- diag(k-1)

J <- matrix(rep(1, (k-1)^2), c(k-1, k-1))

m <- MCMCglmm(SemanticClass ~ -1 + trait + Modality,

random = ~ us(trait):Verb + us(Modality):Verb,

rcov = ~ us(trait):units,

prior = list(

R = list(fix=1, V=0.5 * (I + J), n = 4),

G = list(

G1 = list(V = diag(4), n = 4),

G2 = list(V = diag(2), n = 2))),

burnin = 15000,

nitt = 40000,

family = "categorical",

data = dative)

Read on for an explanation of this model specification, along with some functions for evaluating the model fit.

Multilevel model tutorial at Haskins lab

Austin Frank and I just gave a 2×3 hours workshop on multilevel models at Haskins Lab (thanks to Tine Mooshammer for organizing!). We had a great audience with a pretty diverse background (ranging from longitudinal studies on nutrition, over speech researchers, clinical studies, and psycholinguists, to fMRI researchers), which made for lots of interesting conversations on topics I don’t usually get to think about. Thanks to everyone attending =). We had a great time.

We may post the recordings once we receive them, if it turns out they may be useful. But for now, here are many of the slides we used, a substantial subset of which were created by Roger Levy (UC San Diego) and/or in collaboration with Victor Kuperman (Stanford University) for WOMM’09 at the CUNY Sentence Processing Conference, as indicated on the slides. No guarantees for the R-code and please do not distribute (rather: refer to this page) and ask before citing.

- Conceptual intro to Generalized Linear Models and Generalized Linear Mixed (a.k.a multilevel) models (based on WOMM’09 slides by Roger Levy with minimal changes by Florian Jaeger)

- Common issues in ordinary and multilevel regression modeling (based on Florian Jaeger & Victor Kuperman’s WOMM’09 slides plus some additional materials).

- Additional issues: multiple post-hoc comparisons and random effect ex/in-clusion (Austin Frank)

Questions and comments welcome, preferably using the comment box at the bottom of this page. R related questions should be send to the very friendly email support list for language researchers using R (see R-lang link in the navigation bar to the right).

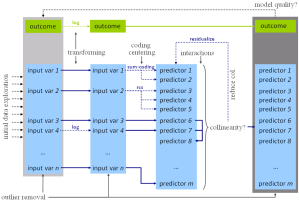

One slide on developing a regression model with interpretable coefficients

While Victor Kuperman and I are preparing our slides for WOMM, I’ve been thinking about how to visualize the process from input variables to a full model. Even though it involves many steps that hugely depend on the type of regression model, which in turn depends on the type of outcome (dependent) variable, there are a number of steps that one always needs to go through if we want interpretable coefficient estimates (as well as unbiased standard error estimates for those coefficients).

In eigener Sache: LSA 2009 meeting

Unfortunately, I was only able to attend the first day of the LSA meeting this year, but it was good being there (ran into lots of interesting folks and saw a couple of good talks). Ting gave his presentation on Constant Entropy Rate in Mandarin Chinese and he was a real pro ;). Read the rest of this entry »

Pre-cuny workshop on regression and multilevel modeling (cntd)

Some time ago, I announced that some folks have been thinking about organizing a small workshop on common issues and standards in regression modeling (including multilevel models) in psycholinguistic research to be held the day before CUNY 2009 (i.e. 03/25 at UC Davis). Here’s an update on this “workshop” along with some thoughts for planning. Read the rest of this entry »

Mini-tutorial on regression and mixed (linear & logit) models in R

This summer, Austin Frank and I organized a six 3h-session tutorial on regression and mixed models. It is posted on our HLP lab wiki and consists out of reading suggestions and commented R scripts that we went through in class. Among the topics (also listed for each session on the wiki) are:

- linear & logistics regression

- linear & logit mixed/multilevel/hierarchical models

- model evaluation (residuals, outliers, distributions)

- collinearity tests and dealing with collinearity

- coding of variables (contrasts)

- visualization

We used both Baayen’s 2008 textbook Analyzing Linguistic Data: A Practical Introduction to Statistics using R (available online) and Gelman and Hill’s 2007 book on Data Analysis using Regression and Multilevel/Hierarchical Models, both of which we can recommend (they also complement each other nicely). If you have questions about this class or you have suggestions for improvement, please send us an email or leave a comment to this page (we’ll get notified).